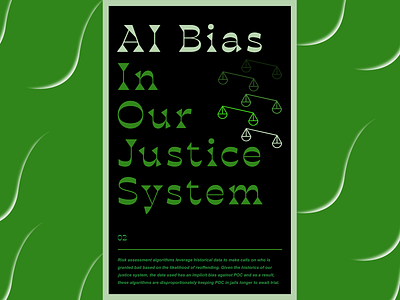

AI Bias in Our Justice System

To get more specific about AI bias, let's talk about risk assessment algorithms. Risk assessment algorithms date back to 2002. They were meant to replace cash bail, which treats detained defendants unequally because their ability to pay depends on their socioeconomic status. These algorithms are built from court system data compiled from past arrest records, including information such as race, age, and pending charges. In some cases, they have overestimated the risk that Black defendants will reoffend, so those defendants are denied bail while white defendants are granted it. Yet some of the white defendants who are granted bail go on to reoffend.

Ultimately, the judge decides whether to follow the algorithm's recommendation or rely on personal judgment. Either way, it is a dilemma: should we depend on the opinion of an individual who may be biased, or on an AI that may be biased as well? As people, we need to work on bettering ourselves, and if we are going to rely on these AI tools, we need our machines to be better as well.